A Living Laboratory

Ask many students why they’re at the University of Utah and they’ll tell you they want to make an impact on the world. Maybe it’s medicine, social work, or realizing the next great engineering feat. Maybe their impact lies in the arts, architecture or the humanities. Then there’s politics, management or public health . . . to name a few.

A growing number of students are looking at climate change and the environment with an urgent sense of purpose and a belief that they can make a difference.

Are you one of them?

The College of Science is offering a new major in Earth & Environmental Science (EES) in fall 2023. EES is an interdisciplinary degree that enables students to study the interconnected nature of earth systems, including the fields of atmospheric science, geology, and ecology. Students with this degree will gain the education and experience to make an impact on the challenges facing our planet.

Living laboratory

So, you’re set to train to make a difference in this world. What is your laboratory going to look like?

Students who declare their EES major will engage with the living laboratory surrounding the university: studying forest ecology in the Wasatch Mountains, geology in the midst of Utah’s national parks and climate science from the top of Utah’s world-famous ski resorts.

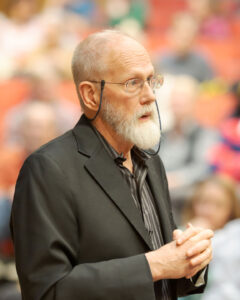

A critical part of learning about earth systems is to experience those systems firsthand. “The ability to have our incredible landscapes as our living laboratory, it’s an amazing strength of the University of Utah,” said William Anderegg, associate professor of biology. Anderegg, who is also director of the Wilkes Center for Climate Science & Policy at the U, played an important role in the creation of the new major as part of a multidisciplinary team. “Utah’s geography, combined with our powerful research make the U one of the best places in the world to study environmental science.”

As an EES student, you will engage with the natural beauty of Utah while working on environmental challenges that face the state and our region. This balance of laboratory and field coursework will prepare you for career opportunities in a wide variety of growing sectors, from environmental consulting to land management, and from conservation to corporate stewardship.

Transformational experience

The new Earth & Environmental Science major will focus on providing students with transformational experiential learning opportunities. First-year students will start their studies as part of the Science Research Initiative (SRI), where they will join a research lab during their first year on campus, no experience required. After a community-building class that introduces university research, students will be placed in a “research stream” with faculty and a group of peers to experience the challenges and opportunities of research, either in the lab or in the field.

EES has broad appeal. It welcomes current U students already pursuing science and earth science degrees, as well as students transferring to the U who are interested in climate science or environmental science education. Current students transferring into the major have the option to use previous research experience for the SRI requirement.

Peter Trapa, dean of the College of Science, believes that EES will not only appeal to a new generation of students at the U but also provide a blueprint for other interdisciplinary programs on campus. “The new Earth and Environmental Science degree is meeting surging student and employer demand for quantitative expertise in environmental science,” said Trapa. “Thanks to the merger between the College of Science and the College of Mines and Earth Sciences, the U can deliver new world-class educational pathways to understand the science of earth’s integrated systems that lie at the heart of addressing future environmental challenges.”

The U has two undergraduate majors that offer an interdisciplinary approach to studying the environment: Earth & Environmental Science and Environmental and Sustainability Studies (ENVST). ENVST strives to foster an understanding of ecological systems and the consequences of human-environment interactions. It takes a science-based approach to solutions and integrated problem solving, drawing on earth systems science, the humanities, and the social and behavioral sciences.

The new EES major, on the other hand, is focused on quantitative reasoning and thinking. It requires students to enroll in the science core classes, similar to most degree programs in the College of Science. Three emphases in climate science, geoscience, and ecosystem science will tailor students’ coursework to their interests, with plenty of space in schedules to add electives and supplemental coursework from different disciplines.

Advisors can help students decide which degree is right for them. Motivated students can double-major in the two programs, or earn a Sustainability Certificate to add to their credentials.

Close to Home

Ainsley Nystrom, a sophomore and College of Science ambassador, is excited about the possibility of declaring her EES major, which promises to streamline her current (multiple) major and minors into one degree. “I stand by the fact that climate science doesn’t just have one aspect,” she said, “and that every aspect … is very interconnected.”

A researcher in the Anderegg Lab, Nystrom studies wildfire as it relates to forest health and drought, a subject that strikes close to home for her. She remembers the year before she came to the U, when she had to initiate an evacuation with her two younger sisters because a brush fire was threatening their home north of Phoenix. The whys and wherefores of that frightening scenario were complex, involving different aspects that were nevertheless interrelated. And while Nystrom understands that scientists must narrow their research, the new major’s interdisciplinary approach, which spans atmospheric sciences, chemistry, biology, geology, mathematics and physics, will allow her to see in living laboratories how, and in what ways, her area of study is affected by others.

“I didn’t know how big of a field environmental science was until I came to the U,” Nystrom concluded. But she knows now, and the new Earth & Environmental Science major is customized to prime her for a long career as a researcher determined to make a difference.

Course plans available now.

Visit science.utah.edu/ees for more information.

By David Pace, originally published @theU.